Help Your IDE Help You

With the capabilities of AI coding assistance snowballing over the last year, a whole new cohort of people are now able to conceive of and build their own software.

Anthropic's Artifacts and ChatGPT's Canvas have enabled everyday people to speak software into existence (granted, neither terribly sophisticated nor easily deployable software). And a whole slew of AI-powered development environments have steadily emerged, enabling those same people (alongside regular developers) to build more fully-featured software and in some cases deploy it to the world. This includes tools and platforms such as Cursor, Windsurf, Bolt, v0, Replit Agent, Cody, Aider and more.

Designing and building software is still complex though. There are so many details to consider. Decisions to make. It's a lot of work to chart a path to success.

If you're not a programmer, you probably have no idea what those details and decisions could even be. If you are a programmer, you probably know, but you're unlikely to develop a thorough and robust plan ahead of time. And you'll undoubtedly miss some things, which will lead to roadblocks and refactors down the line.

Now that AI is in the mix, it'll figure out the details along the way, right? I would say no. Not with our current methods of working with them.

The LLMs in these new environments don't truly know what we're building. They currently need to infer the intent and goals of our projects, whether through examining our codebases or through the incremental implementations of new features and fixes. But they still don't totally get it.

The code they write is only partially informed, and so is the code we write. We need a better way to help both humans and AI understand the projects they're building together.

So how might we plan, map out, and document all the important details and decisions in our projects, giving ourselves, our teammates, and our AI counterparts the context they need in order to build software more effectively?

First there was flow

I've been working with AI-augmented IDEs (integrated development environments) since January 2023 via the GitHub Copilot extension for VS Code. The immediate acceleration in development productivity was both significant and surprising. I honestly felt bad for developers who either didn't have access to these capabilities or outright dismissed them because the tools didn't write perfect code.

The smart auto-complete nature of GitHub Copilot anticipated my next thoughts in ways that kept me in the flow, which, as we all know, is a critical part of any type of skill-based work or activity.

Being in a flow state feels good. Making forward progress feels good. Learning while you do it feels good. Even if the LLMs that enabled these auto-complete features (GPT-3.5 at the time) weren't writing perfect code, they kept me in a flow state. This meant that programming felt good. And that was a huge momentum-maintainer which enabled me to build my ideas faster. The productivity gains were real. Most of the time, my experience was one that felt akin to the LLM reading my mind, which was absolutely bonkers.

But this was just the beginning of what it meant to program with AI inside of your development environment.

Then there was partnership

Shortly after I started using GitHub Copilot, I tried out a program called Cursor. It's a fork of VS Code with a whole bunch of really capable AI features baked in.

There was a feature originally called Copilot++ (now called Cursor Tab) which was basically GitHub Copilot's autocomplete, but better. There was a feature for inline edits; hit Command+K, type a few instructions for what you need it to do, and it would write out the code, showing you diffs between your old code and the newly generated code. And finally, there was a feature that let you chat with an LLM directly in a side panel in the IDE. Basically ChatGPT right next to your code. You could work with it to write much longer blocks of code and apply it to the file you were working on.

These features gave me superpowers. I fell in love with programming all over again because I suddenly felt like I could accomplish anything. This was because OpenAI had just introduced GPT-4, and Cursor supported that model as one that you could chat with. So we had a state-of-the-art LLM that was proven to be exceptional at code generation, which meant that my ignorance was shored up by this expert developer-y thing.

Throughout the year, Cursor fundamentally changed the way I programmed. I could tell it to look at certain files when writing code (i.e. stuffing the necessary information into its context), and it would give me informed answers and solutions. I could even reference documentation from other libraries, which was also added to its context. This upped the code quality immensely.

Context matters

In 2023, LLMs had limited context windows (the short-term memory of LLMs). GPT-4, the premier state-of-the-art model, had an ~8,000-token context window, so I couldn't just dump an entire codebase plus additional documentation of third-party libraries into the model. I had to be very selective in what I brought to its attention, which meant a narrower focus on the problems we were collaborating on.
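To make "very selective" concrete, here is a minimal sketch of choosing what fits in a fixed context window. It assumes a rough four-characters-per-token heuristic (real tokenizers vary, so an exact count would need the model's own tokenizer). The `ContextItem` type, the file names, the priorities, and the 1,000-token reserve for the question and reply are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str
    text: str
    priority: int  # lower number = more important to include


def estimate_tokens(text: str) -> int:
    # Rough heuristic (an assumption): ~4 characters per token.
    return max(1, len(text) // 4)


def select_context(items: list[ContextItem], budget: int, reserve: int = 1_000) -> list[str]:
    """Greedily pick the highest-priority items that fit in the window,
    leaving `reserve` tokens free for the question and the model's reply."""
    remaining = budget - reserve
    chosen: list[str] = []
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if cost <= remaining:
            chosen.append(item.name)
            remaining -= cost
    return chosen


if __name__ == "__main__":
    items = [
        ContextItem("src/auth.py", "x" * 12_000, priority=0),          # ~3,000 tokens
        ContextItem("docs/library-api.md", "x" * 20_000, priority=1),  # ~5,000 tokens
        ContextItem("src/routes.py", "x" * 8_000, priority=2),         # ~2,000 tokens
    ]
    print(select_context(items, budget=8_000))  # the ~5,000-token doc no longer fits
```

Greedy selection skips an item that doesn't fit and keeps going, so a smaller, lower-priority file can still make it in. A stricter approach would stop at the first miss.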

But context matters so much when working with LLMs. The more context (i.e. information) they have about your problem or question or task, the better they'll perform. This is true today as well. I find that most people don't recognize just how powerful and capable these models are because they don't understand the importance of supplying models with ample context.

Context isn't only important for LLMs. As a human developer, having sufficient context about the project you're working on is hugely important when it comes to how successful you'll be with your contributions. And how quickly you'll get to "finished". This is especially the case when working with a team of developers, designers, project managers, product managers, etc. Writing good code is a direct result of how well you understand the project, not just your development skills.

Understanding the project

Whether you're working by yourself or working with a team, to "understand the project" means to "understand the what, why, and how".

- The what: a definition of the thing you're making and its goals (i.e. what you're trying to achieve)

- The why: what problems are being solved and for whom (i.e. the reason this thing needs to exist)

- The how: all the details and decisions that involve UX, technologies, and process (i.e. the plan and best practices)

Right now, when working with AI-powered development tools, there's no elegant or clearly defined way to help the LLM understand these things.

There have been multiple home-grown, manual attempts to provide AI-powered editors with more context about your project so that their outputs are higher quality and there's less of a need to infer the best code to write for the task at hand.

The solution folks have arrived at is effectively: give the LLM text files that you can include in your conversational context to give it meta information about your project.

Here are some examples of ideas that attempt to solve this project context problem that have popped up over the last couple of months:

- Context Boundary - Nov 24

- CONVENTIONS.md - Sept 9

- AI Codex - Sept 6

Prior to even seeing those, I had a task on my to-do list: "Add a .cursorrules for my project so that it knows what I'm trying to do."

If you use Cursor, there's a special project-specific file you can add called .cursorrules, which is basically some meta information that will be included in every prompt that goes to the LLM (i.e. all new Chats and Composer sessions). It has almost zero documentation, but people usually use it to help the LLM understand important details about the project. Things like the tech stack or coding guidelines.
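Here is a minimal sketch of the idea behind .cursorrules: project-level notes that get prepended to every request. Cursor doesn't document how it actually assembles its prompts, so `load_project_rules`, `build_prompt`, and the "Project rules:/Request:" layout are hypothetical. The tech stack in the example rules file is also made up.

```python
import tempfile
from pathlib import Path

RULES_FILENAME = ".cursorrules"


def load_project_rules(project_root: Path) -> str:
    """Return the rules file's contents, or "" if the project has none."""
    rules_file = project_root / RULES_FILENAME
    return rules_file.read_text(encoding="utf-8") if rules_file.is_file() else ""


def build_prompt(project_root: Path, user_message: str) -> str:
    """Hypothetical prompt assembly: project rules go in front of every message."""
    rules = load_project_rules(project_root).strip()
    if not rules:
        return user_message
    return f"Project rules:\n{rules}\n\nRequest:\n{user_message}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Example rules; the stack named here is made up for illustration.
        (root / RULES_FILENAME).write_text(
            "Tech stack: TypeScript, SvelteKit, Postgres.\n"
            "Prefer small, pure functions and add tests for new modules.\n",
            encoding="utf-8",
        )
        print(build_prompt(root, "Add a login form."))
```

Because the rules are injected on every request, they behave like standing instructions. That makes them a natural home for the stable "what, why, and how" of a project rather than task-specific details.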

All of these methods attempt to solve some aspect of the same problem: The code written by the AI isn't good enough because it doesn't truly understand my project.

The same could probably be said about teammates that join a project mid-flight. They don't truly understand a project.

Because I've been in ideate-and-prototype mode for the past 5 months, I'm starting new projects often. I find myself working with Claude to help me with my experiments, assisting me with tech stack choices and feature definitions and strategic clarity. It's a haphazard process with conclusions and decisions spread across multiple chat sessions and Notion documents.

But for now, I want to figure out how to consolidate the bulk of that project planning — all those definitions, decisions, and documentation — into a cohesive and formalized whole. I want to understand what the heck it is I'm building. And I want my AI partner to understand it as well. I also want to understand exactly what documentation is helpful for both the human and the AI when working in concert.

Developing project understanding

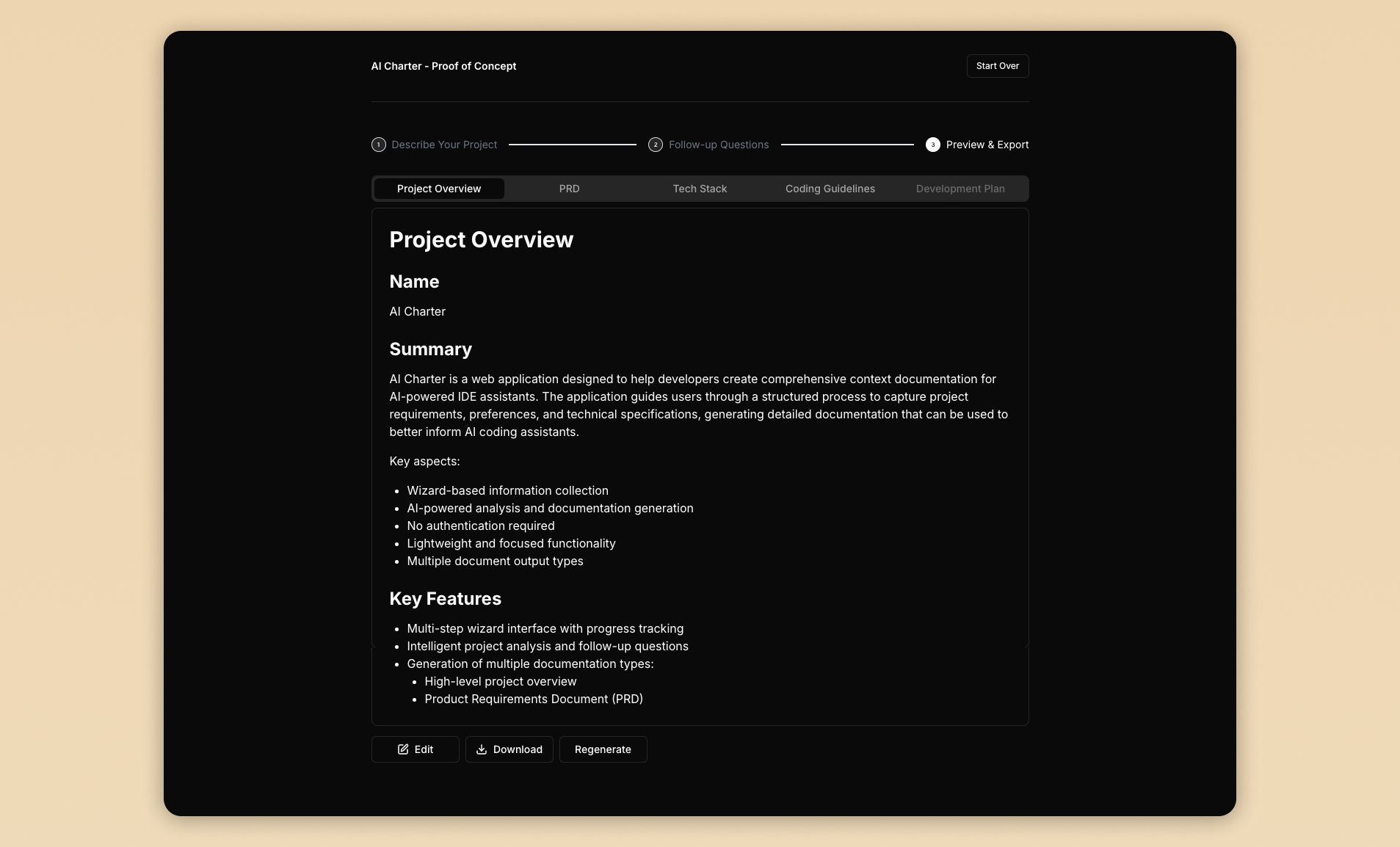

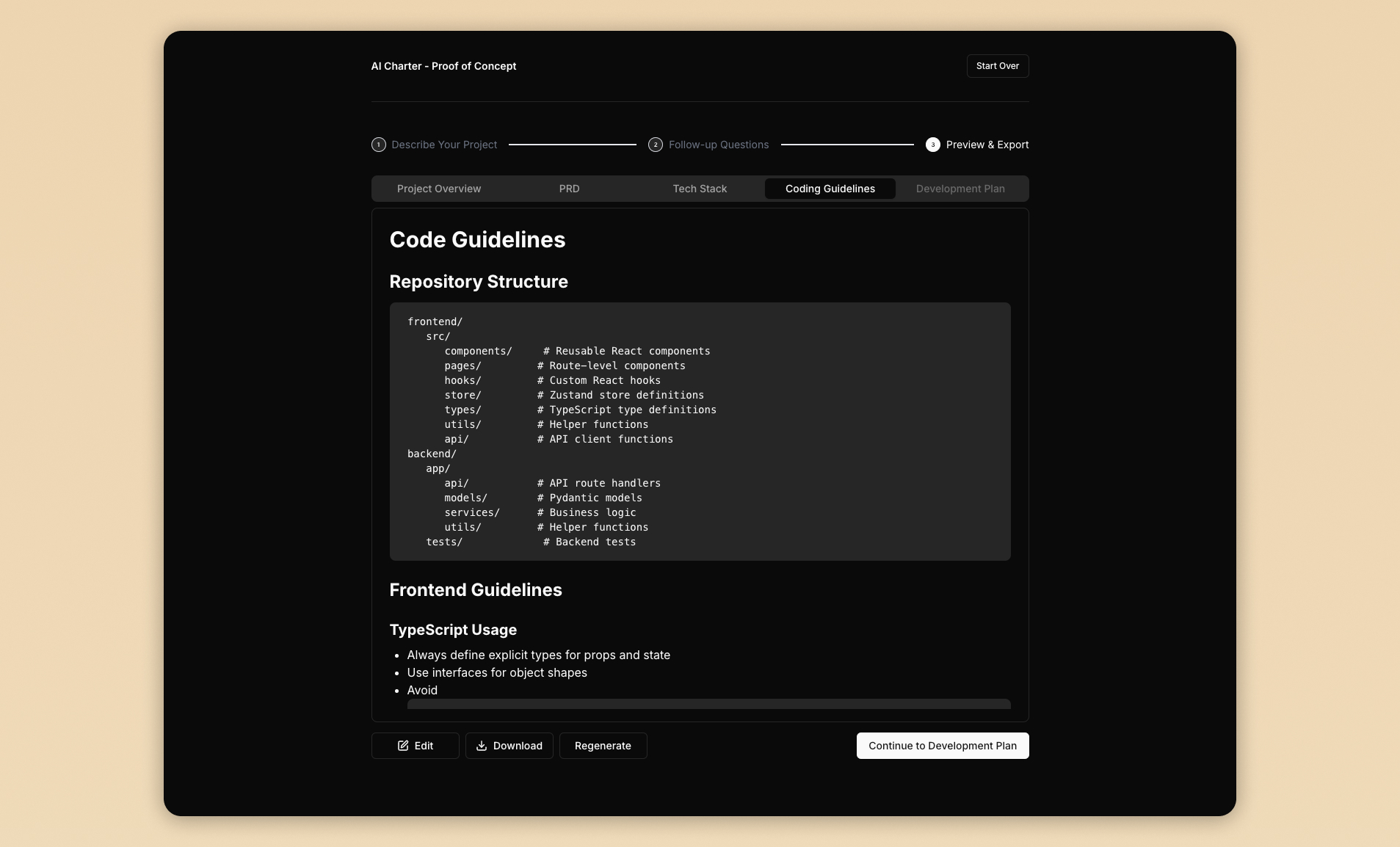

So this leads me to today. I'm building a product to help us all understand the projects we're building. It will work with you to create a product charter, a PRD, a tech stack definition, a set of coding guidelines, and a development plan for bringing your project to fruition.

Moreover, I'm building the plane while flying it. I'm testing the project planning documentation that will be created by the software (prototyped through iterations with Anthropic's Workbench) to see if it can actually help the AI in my IDE more effectively build this product with me.

To restate that: I'm using my software to help build itself.

I've intentionally selected a tech stack I'm unfamiliar with to see if I can be largely hands-off and let the LLM use its understanding of the project to incrementally bring itself to life.

And it's working.

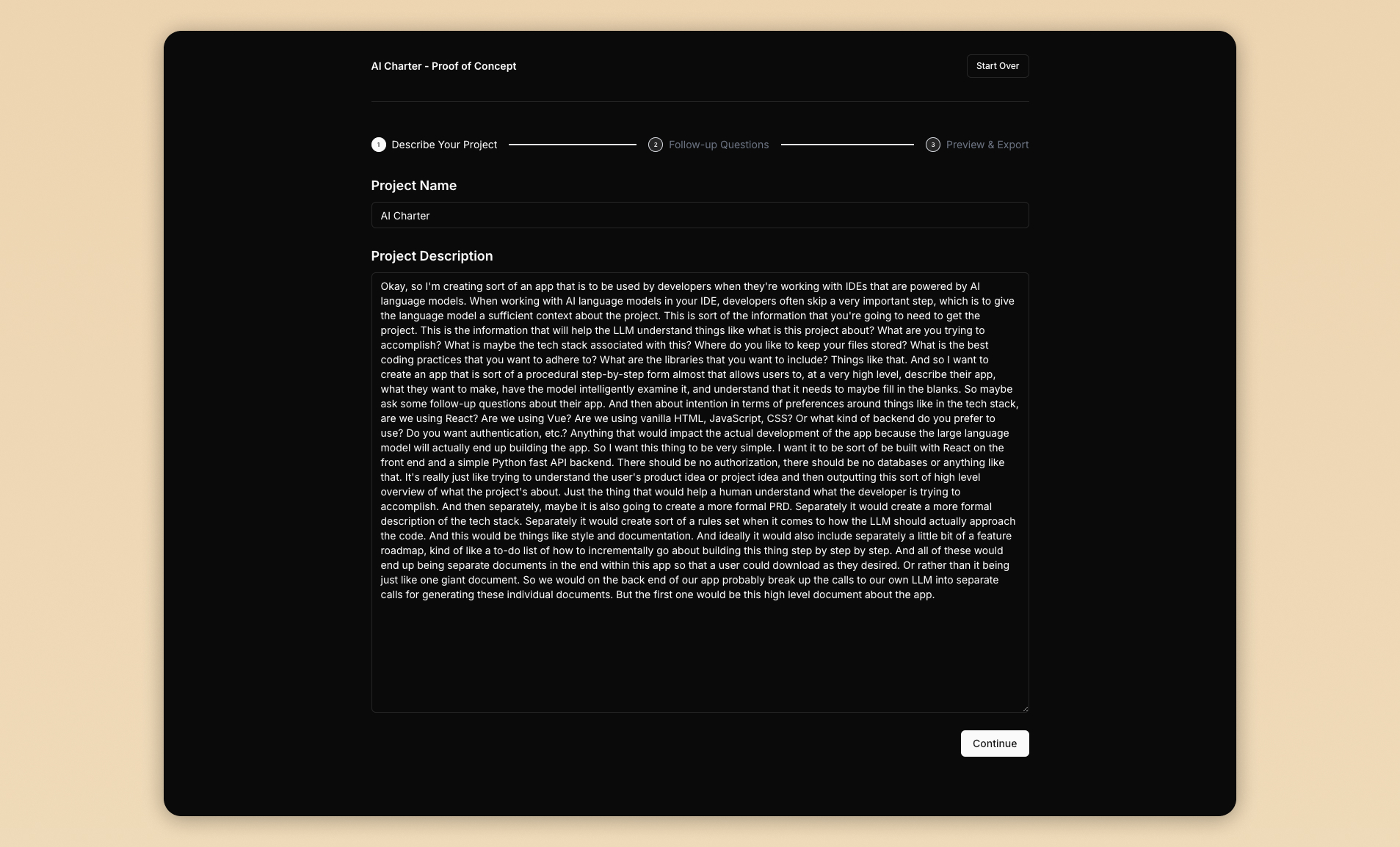

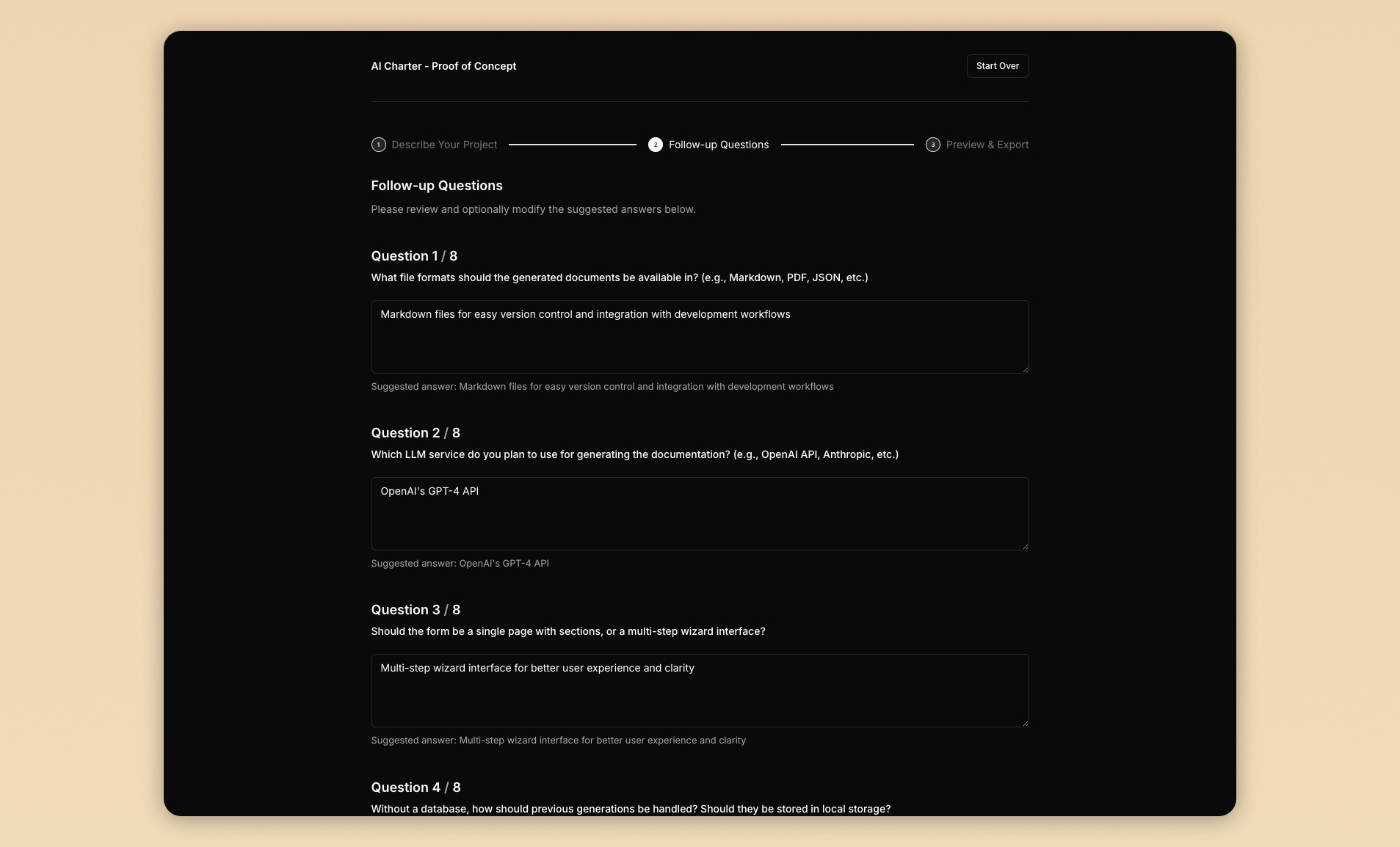

The preliminary UX works like this:

- The user uses mouth words to describe their project at length, and the description is transcribed.

- The LLM analyzes it and asks meaningful follow-up questions.

- The LLM generates drafts of the documents one at a time, letting the user edit each document before the next one is generated (because there's a dependency chain: later documents build on earlier ones).

- The user downloads the documentation.

- The user adds the documentation to their project and uses it as context for their AI-powered IDE.
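The dependency chain in the drafting step above can be sketched as a topological ordering. Each document is drafted only after the documents it builds on have been reviewed, and the reviewed versions become its context. This is an illustration, not how the product is built: the specific dependencies, the document keys (taken from the five documents named earlier), and the `draft`/`review` callbacks, which stand in for the LLM call and the user's edits, are all assumptions.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical dependency map: each document lists the documents it builds on.
DEPENDENCIES: dict[str, set[str]] = {
    "product_charter": set(),
    "prd": {"product_charter"},
    "tech_stack": {"prd"},
    "coding_guidelines": {"tech_stack"},
    "development_plan": {"prd", "tech_stack", "coding_guidelines"},
}

# draft(name, transcript, context) -> text; review(name, text) -> edited text
DraftFn = Callable[[str, str, dict[str, str]], str]
ReviewFn = Callable[[str, str], str]


def generation_order(deps: dict[str, set[str]]) -> list[str]:
    """Order documents so each is drafted after everything it depends on."""
    return list(TopologicalSorter(deps).static_order())


def run_pipeline(transcript: str, draft: DraftFn, review: ReviewFn) -> dict[str, str]:
    """Draft each document in dependency order. The user's reviewed version
    (not the raw draft) is what later documents receive as context."""
    approved: dict[str, str] = {}
    for name in generation_order(DEPENDENCIES):
        context = {dep: approved[dep] for dep in DEPENDENCIES[name]}
        approved[name] = review(name, draft(name, transcript, context))
    return approved


if __name__ == "__main__":
    def fake_draft(name: str, transcript: str, context: dict[str, str]) -> str:
        # Stand-in for an LLM call: records which documents it was given.
        return f"{name} (built on: {', '.join(sorted(context)) or 'transcript only'})"

    def accept_as_is(name: str, text: str) -> str:
        # Stand-in for the user's edit step.
        return text

    for text in run_pipeline("I want to build...", fake_draft, accept_as_is).values():
        print(text)
```

Routing the reviewed text, rather than the raw draft, into downstream documents is what gives the user's edits (and the intentional friction) real weight. A change to the PRD propagates into the tech stack and the development plan.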

Here are some screenshots of the first iteration:

This is my proof-of-concept, and I can consider the concept proven. Lots of insights have surfaced along the way, which is wonderful for a 2-day sprint. I'm now able to proceed with building the real thing (which unfortunately requires a port because I had it build itself with React, and I don't like React).

One key insight: this product shouldn't strive to fully automate the project planning process. The decisions made during this initial step in kicking off a project are critical. Users will want agency. And if they don't (due to lack of technological know-how), they'll at least want to understand what decisions are being made and why. So I feel it's incredibly important to inject intentional friction into the project planning process. (I feel this way about all AI-augmented software and tasks.) This should feel like co-creation, and it should allow the user both to override a recommendation and to learn more about it, serving programmers and non-programmers alike.

I don't know what this actually looks like yet. I have a lot of unanswered questions at this point, so I'll be venturing into some new UX territory.

Stop-gaps

The future of software creation involves AI. It's a no-brainer. We're going to be collaborating with it, so it's best we take steps to create a shared understanding of what it is we're collaborating on.

We're still in the infancy of these AI-powered development tools, though. Bringing this detailed project planning into your IDE of choice is going to be cumbersome (but at least it'll be in your codebase). However, this sort of project planning documentation in general isn't going away. Humans still need it in order to be effective and achieve their goals. And LLMs will need it too. It's the mechanisms for sharing this information with each other that will evolve over time.

While we should always be creating this sort of documentation for ourselves, it takes a lot of time and effort, and we humans are not good at thinking through all the details. Years of being a technical director have shown me that. But that's where LLMs can come into play and fill in the gaps.

They can help us understand our projects. Then we, in turn, can help them understand our projects. It's a win-win where we can just focus on making stuff together.