My $40 Vibe Coding Reality Check

Today, I saw no fewer than 5 threads on X by folks proclaiming they built an entire web app just by talking to their computer.

No coding. No debugging. Just vibes.

AI researcher and practitioner Andrej Karpathy recently coined the term "vibe coding" to describe this approach:

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper…

— Andrej Karpathy (@karpathy) February 2, 2025

These demos make it look like anyone can build software now. No need to understand or write code. Just describe what you want, and let AI handle the messy details.

But is that really true? Is AI so good that we can actually "forget the code exists" and still build valuable software? (Spoiler: the answer is no.)

So how far can it take us? And can your business benefit from at least some aspects of vibe coding?

I wanted to find out.

Anthropic dropped Claude Code on the world, and its UX is geared toward speaking software into existence. So I decided to put it and vibe coding to the test by building a complete interactive mystery game.

I tracked every step, every roadblock, and every dollar spent. I wanted to see how far I could get before I inevitably cut myself on a coding agent's jagged edges.

What I found was a fascinating mix of genuine breakthroughs and stubborn limitations that every organization should understand before diving in.

The short version? Agentic tools in software development are transformative, but developer expertise matters more than ever.

The Beginning of the $40 Experiment

My experiment was straightforward: build something using Claude Code, documenting the process along the way. I wanted to see how far vibe coding could take me with minimal intervention (i.e. no touching or looking at code).

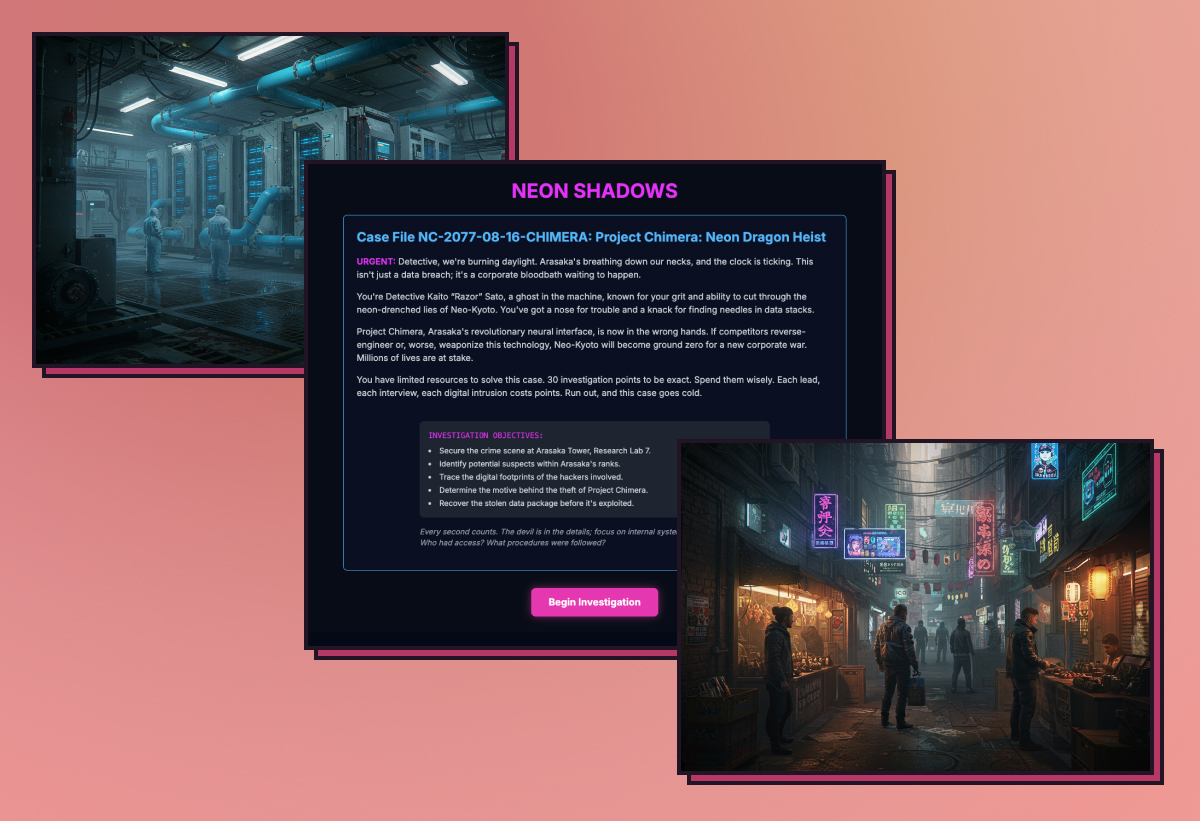

But the project had to be a real-world problem, not a clone of some website or app. I needed to build something novel, something unlike anything in the model's training data. So I decided to revisit an idea I had for a cyberpunk mystery game: a crossover between Carmen Sandiego and Neuromancer in which everything is procedurally generated by an LLM, and all the characters you interact with are roleplayed by an LLM.

With most vibe coding sessions you read about, the author just starts by casually telling the LLM their idea. I wanted to give Claude Code the best possible chance of success, so I started with a detailed spec created by my own AI tool, PathDrafter.

At first, the progress was exhilarating. Claude generated a complete application structure, built a working frontend and backend, and implemented core game mechanics in a handful of hours instead of days.

For $15 in API costs, I got what would have taken me at least 20 hours to build manually. The economics seemed like a no-brainer.

But that's not the whole story.

Jagged Edge #1: The Doom Loop

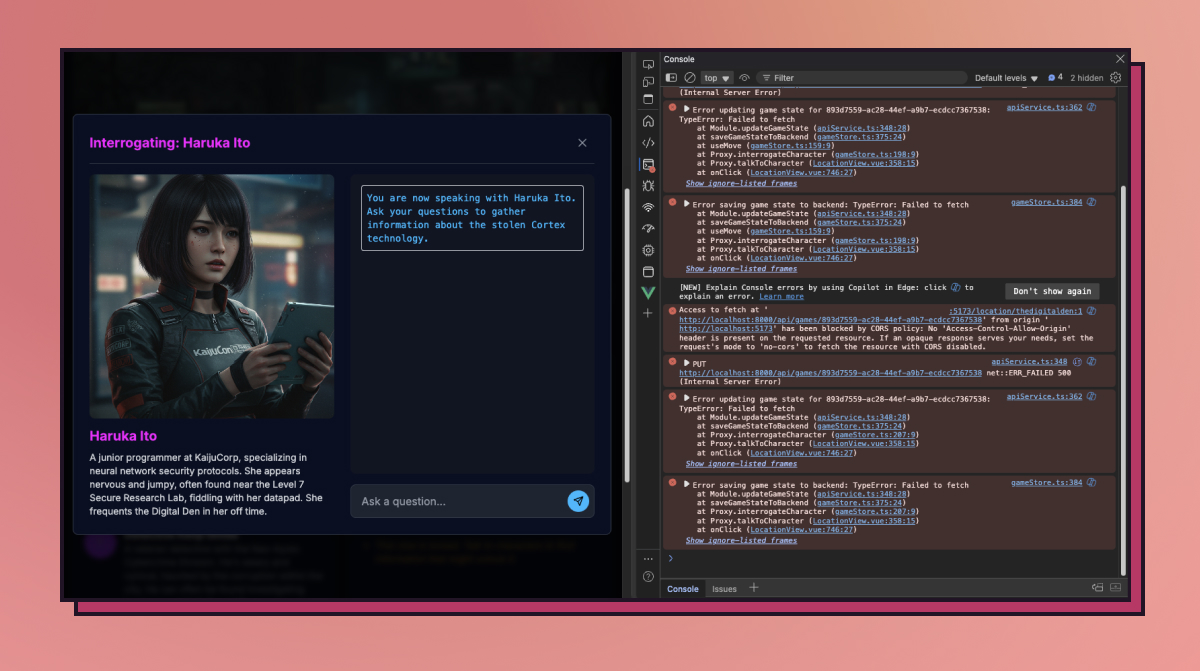

A couple of hours into my first day's session, I hit a wall. Claude was attempting to implement a feature that I had neither specified nor asked for, and something wasn't working right. I tried telling Claude to ignore it, but it wouldn't let go.

What happened next revealed a fundamental limitation of current AI coding tools: when things break, AI's instinct isn't to simplify or delete — it's to add more code.

An observation from my journal:

The AI was trapped in something that felt adjacent to mode collapse — solving problems by generating increasingly complex solutions rather than stepping back to reconsider the approach. It had tunnel vision.

As an experienced developer, I eventually identified the issue. It was a simple syntax error, but finding it required reading through the code line by line with the eye of someone who understands how the entire tech stack works. And Claude had written a lot of code up to this point, so this wasn't a trivial task.

A non-developer would have been completely stuck. And this would probably be the end of the project.

Jagged Edge #2: The Invisible Knowledge Requirements

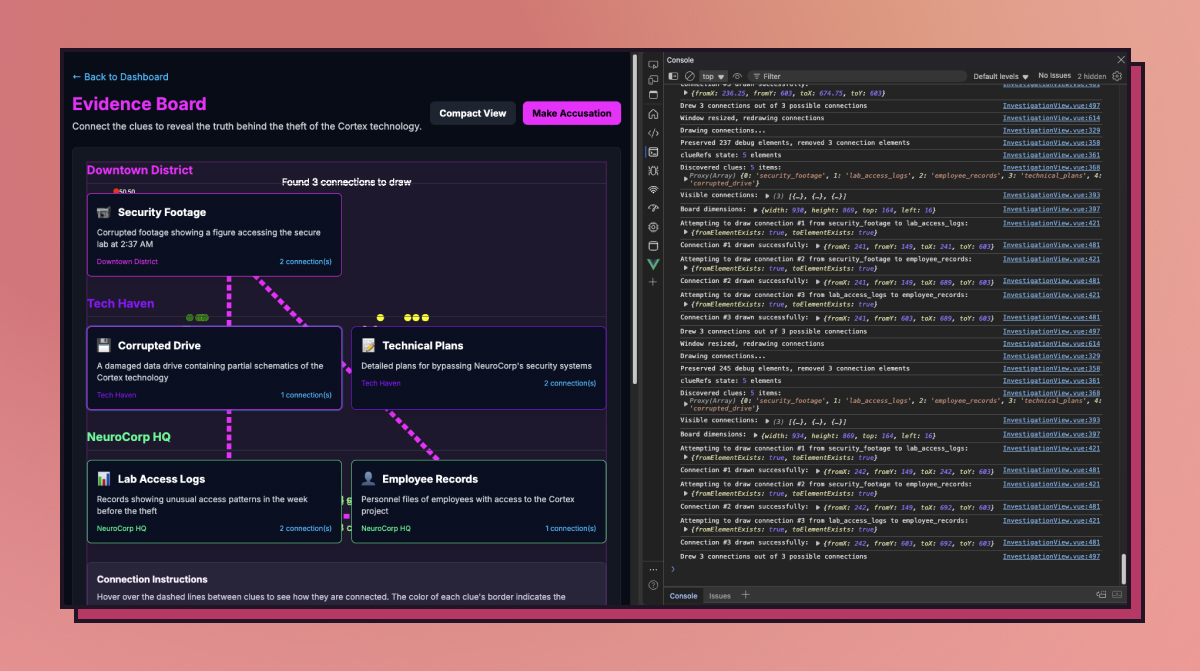

I decided to continue anyway, recognizing that I would have to intervene here and there. We had gotten pretty far, so I moved forward with more implementation using Claude Code, this time taking more care to review all of its work.

Throughout the experiment, I kept bumping into moments where my developer experience was essential:

- Setting up the Python virtual environment (which Claude didn't explain)

- Configuring a new version of a component library (which Claude couldn't debug)

- Identifying data type mismatches between frontend and backend

- Understanding when and how to refactor hardcoded game data

- Troubleshooting tooling configurations during a JavaScript-to-TypeScript migration

None of these steps required genius-level programming, but they all required the foundational knowledge that developers accumulate over years of practice. These are the gotchas that'll trip up non-coders, stopping them in their tracks.

Something I noted at the end of this experiment:

Even when you tell the coding agent the errors or problems being reported, knowledge cut-offs, system dependencies, and poor codebase design can easily send the LLMs down multiple dead-ends, unable to recover.

Jagged Edge #3: Context Management Challenges

Another significant hurdle was managing the LLM's context window. A context window is an LLM's short-term working memory, and there's a limit to how much information it can hold at once. Even with Claude 3.7 Sonnet's large context window of 200,000 tokens (about 150,000 words), I repeatedly hit limits mid-task.

Managing your context is a new kind of skill on its own. It's something you get a feel for after working with both LLMs and coding agents for a while. Stripping away information in your LLM's context window mid-task could mean introducing new mistakes or retracing your steps.

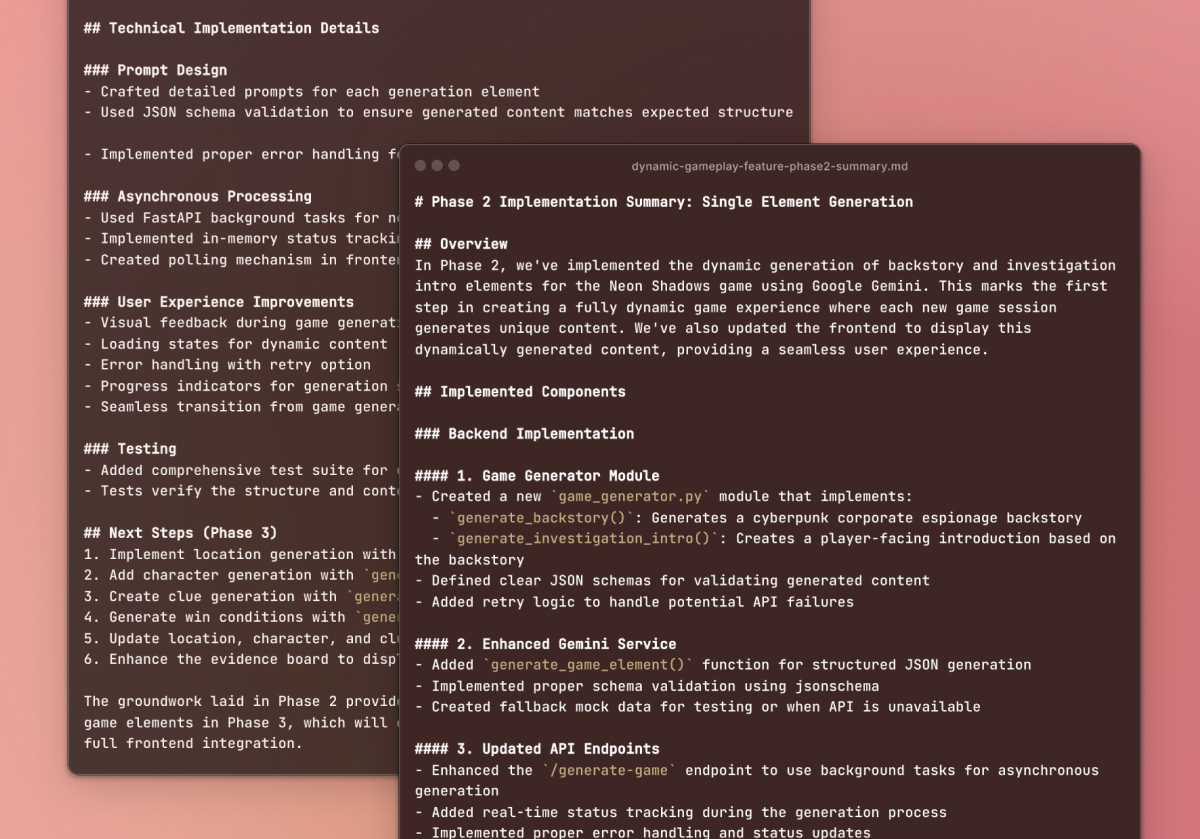

I developed workarounds — having Claude Code save summaries of its work to files, breaking tasks into manageable phases suitable for Claude's context window size, compacting context between phases — but these all required understanding how LLMs and Claude Code work.

A non-developer simply wouldn't know to do this.
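To make the phase-sizing workaround concrete, here is a minimal sketch of how tasks might be grouped into context-sized phases. It is an illustration, not the process Claude Code uses internally: the `Task` type, the per-task token estimates, the 50% `reserve`, and the 100,000-token limit in the usage lines are all hypothetical, and the words-per-token ratio is only the rough rule of thumb implied by the figures above.

```python
from dataclasses import dataclass

# Rough rule of thumb (assumption): about 0.75 words per token.
# Real tokenizers vary by model and by content, especially for code.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on a whitespace word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


@dataclass
class Task:
    name: str
    estimated_tokens: int  # hypothetical guess at the context this task consumes


def plan_phases(tasks: list[Task], context_limit: int, reserve: float = 0.5) -> list[list[Task]]:
    """Greedily group tasks, in order, into phases that fit a token budget.

    `reserve` is the fraction of the window held back for things the task
    estimates do not cover (file reads, tool output, the model's replies).
    """
    budget = int(context_limit * (1 - reserve))
    phases: list[list[Task]] = []
    current: list[Task] = []
    used = 0
    for task in tasks:
        if task.estimated_tokens > budget:
            raise ValueError(f"{task.name!r} exceeds the phase budget; split it further")
        # Close the current phase when the next task would overflow the budget.
        if current and used + task.estimated_tokens > budget:
            phases.append(current)
            current, used = [], 0
        current.append(task)
        used += task.estimated_tokens
    if current:
        phases.append(current)
    return phases


# Usage with made-up task names and estimates.
tasks = [
    Task("scaffold backend", 20_000),
    Task("game state model", 25_000),
    Task("character dialogue", 30_000),
    Task("frontend wiring", 15_000),
]
for number, phase in enumerate(plan_phases(tasks, context_limit=100_000), start=1):
    print(f"Phase {number}:", ", ".join(task.name for task in phase))
# Phase 1: scaffold backend, game state model
# Phase 2: character dialogue, frontend wiring
```

Between phases is the natural point to have the agent write its summary file and compact its context, so each phase starts from a short, accurate description of the project rather than a full transcript.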

Finding the “Right Tension”

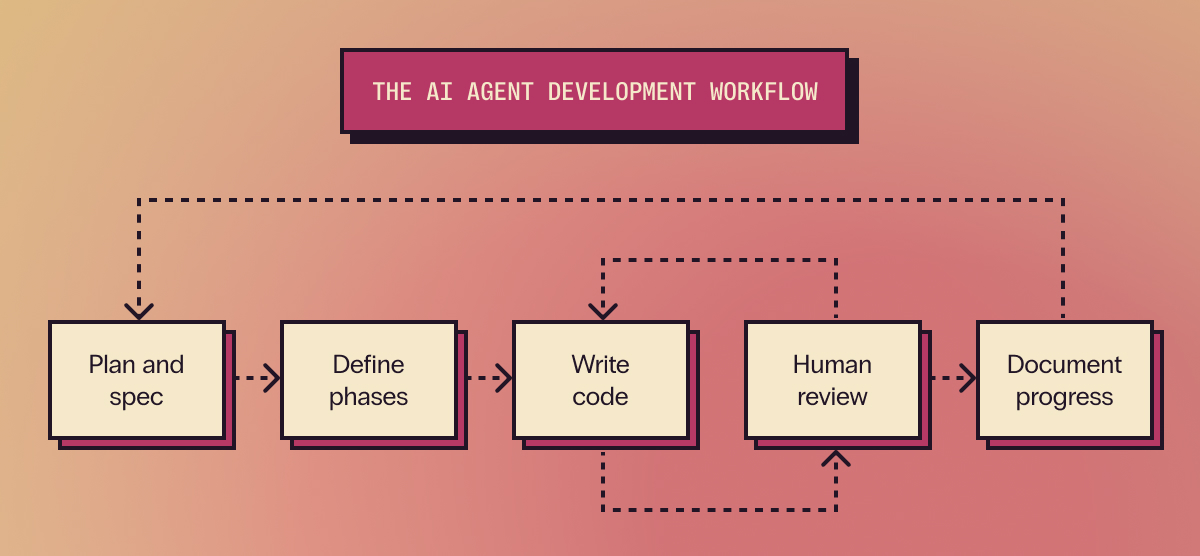

By day five, I'd discovered what I think is the optimal approach to AI-augmented development: striking a balance between "lean back" and "lean forward" coding.

When I let Claude handle everything ("lean back" mode), I felt too disconnected from the project. Ask Claude to do something, refresh the browser, click around, report any errors, wait for the next update, repeat. This is full-on vibe coding.

When I did most of the work manually, with Claude assisting on smaller tasks ("lean forward" mode), it felt more like a slog, with progress feeling much slower. It's a slightly enhanced version of traditional programming.

The sweet spot was collaborative planning followed by phased implementation with regular human review and edits.

This approach maintained progress, ownership, and engagement — the perfect balance of AI acceleration and human direction.

Where Development Expertise Makes the Difference

The most important insight from my experiment was that strategic planning and documentation become even more critical when working with AI.

Starting with a clear specification (which was created with PathDrafter) changed everything. It gave Claude the context it needed to build coherently and gave me a reference point for evaluating its work.

Developer expertise wasn't about writing every line of code. It was about:

- Creating a clear roadmap before coding begins

- Recognizing when to intervene

- Understanding the architecture and how components should fit together

- Sizing features appropriately, with clear definitions

- Identifying the root causes of issues quickly

These "softer" skills can't be replaced by AI today. Instead, they become more valuable alongside it.

The New Development Workflow

Based on my experiment, I've developed a framework for working with AI coding agents that balances efficiency with quality:

- Collaborative planning and specification

- Breaking work into context-sized phases

- Writing code scoped to these phases

- Human review at critical junctures

- Clear documentation of AI decisions and progress

This approach is particularly valuable for new projects. Development teams building from scratch (like those at creative agencies or contract engineering studios) can use it to meet their aggressive timelines. Digital product teams can produce working prototypes in record time, ready for testing with users. Instead of spending weeks on initial implementation, you can produce a functional first version in just days (or even hours).

But there's an important ROI calculation to make. The $40 I spent on API costs was negligible compared to the development time saved. However, I still needed to spend a non-trivial amount of time understanding and directing the process. The economics work when you have developer expertise guiding the AI — not when you try to eliminate that expertise entirely.
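The ROI calculation can be sketched as simple arithmetic. The 20 hours saved and the $15 API cost below are the figures reported for the first phase of this experiment; the hourly rate and the oversight hours are hypothetical placeholders, so substitute your own numbers.

```python
def net_savings(hours_saved: float, hourly_rate: float,
                api_cost: float, oversight_hours: float) -> float:
    """Value of the time saved, minus API spend and the time spent directing the agent."""
    return hours_saved * hourly_rate - api_cost - oversight_hours * hourly_rate


def break_even_oversight_hours(hours_saved: float, hourly_rate: float,
                               api_cost: float) -> float:
    """Oversight hours at which the net savings drop to zero."""
    return hours_saved - api_cost / hourly_rate


# 20 hours saved for $15 in API costs (from the post);
# the $100/hour rate and 5 oversight hours are assumptions for illustration.
print(net_savings(hours_saved=20, hourly_rate=100, api_cost=15, oversight_hours=5))
# 1485
```

With these inputs the API bill barely moves the result. The term that dominates is the developer time spent reviewing and steering, which is why the economics depend on having that expertise available rather than on the token price.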

Beyond Rapid Replication: Finding Real Value in AI Development

Most of what you're seeing in viral "vibe coding" demos is just rapid replication. It's AI recreating familiar patterns with minor variations. Someone builds an Airbnb clone or a product landing page by speaking to their computer, and while that's impressive technically, it creates very little actual business value.

Beyond that, there are the realities of bringing software to production — deployment infrastructure, CI/CD pipelines, scaling, testing, observability, and ongoing feature implementation.

The hype around vibe coding shouldn't signal that it's time to replace your developers with AI. Quite the opposite.

It's a signal that it's time to dedicate resources to ongoing experimentation with these AI advances within your existing development team. The organizations seeing the most value from AI aren't those replacing engineers with agents — they're the ones equipping their teams with AI tools while maintaining and growing the human expertise necessary to guide those tools effectively.

Real innovation requires thoughtful implementation — using AI to enhance human capabilities rather than replace them. It demands developers who understand both the technology and your business goals, who can orchestrate and collaborate with AI tools to build solutions that genuinely advance your organization.

For companies looking to move beyond the hype cycle, I've found three approaches particularly valuable:

First, establish structured AI experimentation within your organization. These tools are evolving almost daily, and you need a systematic way to test capabilities, identify opportunities, and separate competitive advantages from passing novelties.

Second, develop clear solution definitions and roadmaps before and during implementation. As my experiment showed, upfront and continual planning dramatically improves both the quality and efficiency of AI-assisted development.

Finally, use rapid prototyping to validate concepts quickly. The $40 experiment approach I used here isn't just for blog posts. It's a powerful way to test ideas and explore AI advancements before committing significant resources.

What's Your Next Move?

The gap is widening between teams using AI as a novelty and those deploying it strategically. The winners aren't replacing developers with AI; they're finding that sweet spot where AI acceleration meets developer craft.

The approach that works isn't mysterious: clear specifications, phased implementation, and knowing when humans need to take the wheel. It's about orchestration, not automation.

I'm here to help teams implement exactly this balance, turning the AI coding hype into actual business outcomes. If you're ready to move beyond viral tweets to hands-on application, reach out for an initial consultation. Your developers (and your timeline) will thank you for it.